The Role of Data Scientists in a Tech-Driven Supply Chain

The Evolving Landscape of Supply Chain Management

Supply Chain Resilience in a Dynamic World

The global supply chain is constantly evolving, adapting to shifting geopolitical landscapes, technological advancements, and unexpected disruptions. Understanding and proactively addressing these changes is crucial for businesses to maintain operational efficiency and profitability. This evolution necessitates a move beyond traditional, linear models towards more agile, resilient, and adaptable systems. The focus has shifted from simply minimizing costs to optimizing the entire supply chain ecosystem, including risk mitigation and sustainable practices.

Supply chain resilience is no longer a nice-to-have but a necessity. Businesses are recognizing the importance of diversifying their suppliers, building stronger relationships with key partners, and implementing robust contingency plans to navigate disruptions like pandemics, natural disasters, and geopolitical tensions. This emphasis on resilience underscores the need for proactive risk assessment and scenario planning to anticipate and mitigate potential disruptions. The ability to quickly respond to unforeseen events and maintain operations is paramount in today's volatile environment.

The Role of Technology in Modern Supply Chains

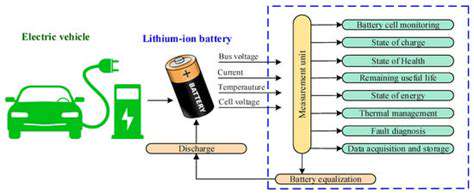

Technology is revolutionizing the way supply chains operate. From sophisticated software solutions for inventory management and logistics to real-time tracking and data analytics, technology plays a vital role in optimizing processes, improving visibility, and enhancing decision-making. The implementation of these technologies enables businesses to gain a deeper understanding of their supply chain performance, identify bottlenecks, and streamline operations.

Advanced technologies such as AI and machine learning are transforming supply chain management by enabling predictive modeling and proactive risk identification. This proactive approach allows businesses to anticipate potential disruptions and adjust strategies accordingly. Furthermore, the use of automation and robotics is optimizing various stages of the supply chain, from warehousing and order fulfillment to transportation and delivery.

Sustainable Practices and Ethical Considerations

Sustainable practices are becoming increasingly important in supply chain management. Companies are recognizing the need to minimize their environmental footprint and adopt ethical sourcing strategies. This includes considering the environmental impact of materials, transportation methods, and manufacturing processes. Companies are striving to reduce waste, conserve resources, and promote environmentally friendly practices throughout their supply chains.

Ethical considerations are also paramount. Fair labor practices, responsible sourcing, and transparency in supply chains are becoming crucial factors in building trust and maintaining brand reputation. Consumers are increasingly demanding ethical sourcing and environmental responsibility, placing pressure on businesses to adopt sustainable practices. Companies that prioritize these aspects are more likely to attract and retain customers, fostering long-term success and positive brand image.

Data-Driven Insights for Predictive Analysis

Data Collection and Preparation

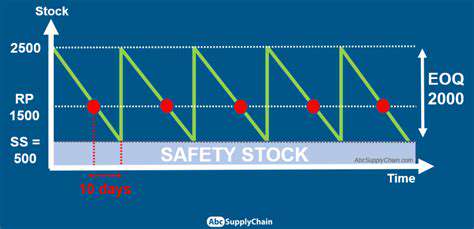

A crucial first step in achieving predictive insights is the meticulous collection and preparation of data. This involves identifying the relevant data sources, ensuring data quality, and transforming the data into a usable format. Thorough data cleaning is essential to eliminate inconsistencies and errors that could skew the results. This process can include handling missing values, outliers, and standardizing formats, ultimately leading to more accurate and reliable predictions.
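The cleaning steps described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a recommended pipeline: the record layout, the field names (`sku`, `lead_time_days`), and the 30-day cap used to tame outliers are all assumptions made for the example.

```python
from statistics import median

# Hypothetical raw shipment records; field names and values are illustrative only.
raw_records = [
    {"sku": " ab-101 ", "lead_time_days": "4"},
    {"sku": "AB-102", "lead_time_days": ""},      # missing value
    {"sku": "ab-103", "lead_time_days": "6"},
    {"sku": "AB-104", "lead_time_days": "5"},
    {"sku": "AB-105", "lead_time_days": "90"},    # likely outlier
]


def clean_records(records, max_lead_time=30.0):
    """Standardize SKU formats, cap outliers, and impute missing lead times."""
    parsed = []
    for rec in records:
        text = rec["lead_time_days"].strip()
        parsed.append({
            # Standardize format: trim whitespace and upper-case the SKU.
            "sku": rec["sku"].strip().upper(),
            # Cap extreme values at an assumed threshold; keep gaps as None for now.
            "lead_time_days": min(float(text), max_lead_time) if text else None,
        })

    # Impute missing values with the median of the observed (capped) values.
    # median() raises StatisticsError if no value was observed at all.
    observed = [r["lead_time_days"] for r in parsed if r["lead_time_days"] is not None]
    fill_value = median(observed)
    for r in parsed:
        if r["lead_time_days"] is None:
            r["lead_time_days"] = fill_value
    return parsed


cleaned = clean_records(raw_records)
for row in cleaned:
    print(row)
```

Median imputation and a fixed cap are only two of many possible choices; which treatment is appropriate depends on why values are missing or extreme in the first place.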

Data preparation also encompasses selecting the appropriate variables for analysis. Understanding which variables correlate with the desired outcome is paramount. Careful consideration must be given to the potential impact of irrelevant variables, which could lead to misleading or inaccurate conclusions. The selection of relevant and reliable data is fundamental to producing robust predictive models.

Predictive Modeling Techniques

Several statistical and machine learning techniques are available for developing predictive models. Choosing the right technique depends on the nature of the data and the specific predictive task. Regression analysis, for instance, is useful for forecasting continuous variables, while classification models are better suited for categorizing data.
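As a small illustration of regression used for forecasting a continuous variable, the sketch below fits a straight line to weekly demand by ordinary least squares and extrapolates one week ahead. The demand figures and the helper name `fit_line` are invented for the example; real demand is rarely this clean and usually needs seasonality and other drivers.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept (single predictor)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx  # undefined if all x values are identical
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical weekly demand (units) for one product.
weeks = [1, 2, 3, 4, 5]
demand = [110, 120, 130, 140, 150]

slope, intercept = fit_line(weeks, demand)
forecast_week_6 = slope * 6 + intercept
print(f"slope={slope:.1f}, intercept={intercept:.1f}, week 6 forecast={forecast_week_6:.1f}")
```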

Machine learning algorithms, including decision trees, support vector machines, and neural networks, can also be employed to build sophisticated predictive models. Each technique has its strengths and weaknesses, and selecting the most appropriate one requires careful consideration of the dataset's characteristics and the desired level of accuracy.

Model Evaluation and Validation

After developing a predictive model, it's critical to evaluate its performance and validate its accuracy. This involves using appropriate metrics to assess the model's ability to predict outcomes correctly. Common metrics include accuracy, precision, recall, and F1-score for classification models, and root mean squared error (RMSE) and R-squared for regression models. Evaluating model performance allows for identification of areas for improvement and ensures the reliability of the predictive insights generated.
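The metrics named above have short definitions that are easy to compute by hand. The following sketch implements them in plain Python for a binary classifier (labels 0 and 1) and for a regression model; the function names and sample values are illustrative assumptions.

```python
import math


def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


def regression_metrics(y_true, y_pred):
    """RMSE and R-squared; R-squared is undefined when y_true is constant."""
    n = len(y_true)
    mean_true = sum(y_true) / n
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return {"rmse": math.sqrt(ss_res / n), "r2": 1 - ss_res / ss_tot}


# Hypothetical example: 1 = shipment arrived late, 0 = on time.
clf = classification_metrics([1, 0, 1, 1, 0, 0, 1, 0], [1, 0, 0, 1, 0, 1, 1, 0])
reg = regression_metrics([100, 120, 140, 160], [110, 120, 130, 160])
print(clf)
print(reg)
```

Which metric matters most depends on the cost of each error type: if a missed late shipment is more expensive than a false alarm, recall deserves more weight than precision.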

Validation techniques, such as cross-validation, are crucial for assessing the model's generalizability. These methods help detect overfitting, a common issue where the model performs well on the training data but poorly on new, unseen data. Rigorous validation gives greater confidence that the model will provide reliable predictions in real-world scenarios.
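To make the idea of cross-validation concrete, here is a minimal k-fold sketch in plain Python. It splits the data into contiguous folds, fits a simple least-squares line on the training folds, and reports RMSE on each held-out fold. The helper names and the data are assumptions for illustration, and the folds are not shuffled.

```python
import math


def k_fold_splits(n_samples, k):
    """Yield (train_indices, test_indices) for k contiguous, unshuffled folds."""
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = list(range(start, start + size))
        train_idx = [i for i in range(n_samples) if i < start or i >= start + size]
        yield train_idx, test_idx
        start += size


def fit_line(xs, ys):
    """Ordinary least squares for a single predictor."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def cross_validated_rmse(xs, ys, k=3):
    """Return the held-out RMSE for each of the k folds."""
    fold_scores = []
    for train_idx, test_idx in k_fold_splits(len(xs), k):
        slope, intercept = fit_line([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        squared_errors = [(ys[i] - (slope * xs[i] + intercept)) ** 2 for i in test_idx]
        fold_scores.append(math.sqrt(sum(squared_errors) / len(squared_errors)))
    return fold_scores


# Hypothetical data: order volume (x) against handling time in hours (y).
volumes = [1, 2, 3, 4, 5, 6]
hours = [12, 14, 17, 19, 22, 24]
scores = cross_validated_rmse(volumes, hours, k=3)
print("per-fold RMSE:", [round(s, 3) for s in scores])
print("mean RMSE:", round(sum(scores) / len(scores), 3))
```

A large gap between the training error and these held-out scores is the usual sign of overfitting. For time-ordered supply chain data, a forward-chaining split that always tests on later periods than it trains on is often a better fit than the plain folds shown here.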

Deployment and Monitoring

Deploying a predictive model into a production environment requires careful planning and execution. This involves integrating the model into existing systems and ensuring its seamless operation. The model's performance needs to be continuously monitored to detect any degradation in accuracy or unexpected changes in the data. This ongoing monitoring is essential to maintain the model's effectiveness and ensure its continued relevance in a dynamic environment.
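One simple way to monitor a deployed model is to track its recent prediction error and raise a flag when it drifts well above the error measured at validation time. The sketch below assumes a hypothetical `ErrorMonitor` class, a rolling window, and a tolerance factor of 1.5; these are illustrative choices, not a standard.

```python
from collections import deque


class ErrorMonitor:
    """Flags degradation when the rolling mean absolute error exceeds a baseline."""

    def __init__(self, baseline_mae, window=5, tolerance=1.5):
        self.baseline_mae = baseline_mae    # error observed during validation
        self.tolerance = tolerance          # allowed multiple of the baseline
        self.errors = deque(maxlen=window)  # keeps only the most recent errors

    def record(self, actual, predicted):
        """Store one observation and return True if the model looks degraded."""
        self.errors.append(abs(actual - predicted))
        return self.is_degraded()

    def is_degraded(self):
        # Wait until the window is full so a single bad point does not trigger.
        if len(self.errors) < self.errors.maxlen:
            return False
        recent_mae = sum(self.errors) / len(self.errors)
        return recent_mae > self.tolerance * self.baseline_mae


# Hypothetical stream of (actual demand, predicted demand) pairs.
monitor = ErrorMonitor(baseline_mae=2.0, window=3, tolerance=1.5)
stream = [(100, 101), (100, 98), (100, 99), (100, 110)]
flags = [monitor.record(actual, predicted) for actual, predicted in stream]
print(flags)  # [False, False, False, True]
```

In practice a flag like this would typically trigger an investigation or retraining rather than an automatic rollback, since the cause may be a change in the data rather than a fault in the model.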

Business Applications and Insights

Predictive insights derived from data analysis can be applied across a wide range of business functions. For example, in marketing, predictive models can identify potential customers and personalize marketing campaigns. In finance, predictive models can be used to assess credit risk and forecast market trends. In healthcare, predictive models can help identify patients at high risk of developing certain diseases. These applications highlight the significant potential of data-driven insights to enhance decision-making and drive business success.

Ultimately, the insights derived from predictive modeling should be translated into actionable strategies. Transforming data into meaningful insights requires a deep understanding of the business context and a strategic approach to implementation.

Improving Decision-Making Through Statistical Modeling

Understanding the Fundamentals of Statistical Modeling

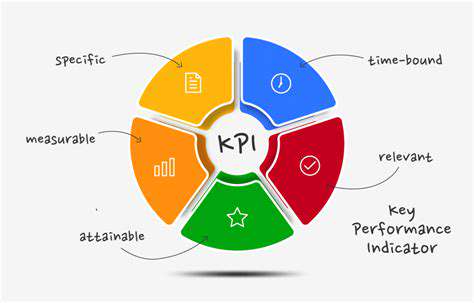

Statistical modeling is a powerful tool for extracting insights from data and making informed decisions. It involves using mathematical and statistical techniques to create representations of real-world phenomena. This process allows data scientists to identify patterns, quantify relationships, and predict future outcomes. A fundamental understanding of these techniques is crucial for effectively leveraging data to improve decision-making processes, whether in business, scientific research, or other fields.

The core principles of statistical modeling, such as hypothesis testing, regression analysis, and probability distributions, provide a framework for evaluating the reliability and significance of findings. A strong foundation in these principles is essential to avoid drawing misleading conclusions based on incomplete or misinterpreted data.

Data Collection and Preparation for Modeling

Effective statistical modeling relies heavily on the quality and relevance of the data used. Data collection methods must be carefully planned and executed to ensure representativeness and accuracy. This includes defining clear objectives, selecting appropriate data sources, and establishing robust data collection procedures. Furthermore, data preparation is critical, encompassing tasks like data cleaning, transformation, and feature engineering to ensure data integrity and compatibility with the chosen modeling techniques.

Data cleaning involves identifying and correcting errors, inconsistencies, and missing values. Feature engineering involves creating new variables or transforming existing ones to improve model performance. Proper data preparation is a crucial step that significantly impacts the success of the modeling process.
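Feature engineering often means deriving new variables from raw fields. As a rough sketch, the function below turns two dates on an order record into features a model could use. The record layout and feature names are assumptions for illustration.

```python
from datetime import date


def engineer_features(order):
    """Derive model inputs from raw ISO-formatted order and delivery dates."""
    ordered = date.fromisoformat(order["order_date"])
    delivered = date.fromisoformat(order["delivery_date"])
    return {
        "lead_time_days": (delivered - ordered).days,
        "order_weekday": ordered.weekday(),          # Monday = 0, Sunday = 6
        "is_weekend_order": ordered.weekday() >= 5,  # Saturday or Sunday
        "order_month": ordered.month,                # coarse seasonality signal
    }


# Hypothetical order record.
order = {"order_date": "2024-03-02", "delivery_date": "2024-03-09"}
features = engineer_features(order)
print(features)
```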

Choosing the Right Statistical Model

Selecting the appropriate statistical model is essential for achieving accurate and meaningful results. The choice depends on the nature of the data, the research question, and the desired outcome. Data scientists must consider various models, including linear regression, logistic regression, time series analysis, and machine learning algorithms, each with its own strengths and limitations.

Careful consideration of factors such as the type of data (categorical, numerical, time-series), the relationship between variables, and the desired level of prediction accuracy is crucial in selecting the most suitable model for a given problem.

Model Validation and Evaluation

A crucial aspect of statistical modeling is validating the model's accuracy and reliability. This involves using various techniques to assess how well the model fits the data and generalizes to unseen data. These techniques include cross-validation, hold-out samples, and performance metrics such as R-squared, RMSE, and precision/recall.

The validation process helps identify potential biases or overfitting issues. Appropriate metrics and techniques allow data scientists to make informed judgments about the model's performance and refine it if necessary.

Interpreting Model Results and Communicating Insights

Once a model is built and validated, the next step involves interpreting the results and communicating the findings effectively. This involves understanding the coefficients, p-values, and other statistical measures to draw meaningful conclusions about the relationships between variables. Clear and concise communication of these insights is vital for stakeholders to understand and utilize the results for decision-making.

Data visualization plays a key role in communicating the findings. Visual representations of the model's results can enhance understanding and facilitate better decision-making.

Applying Statistical Modeling in Real-World Scenarios

Statistical modeling finds widespread application in diverse fields. In finance, it's used for risk assessment and portfolio optimization. In healthcare, it's employed to predict disease outbreaks and personalize treatment plans. In marketing, it aids in customer segmentation and targeted advertising campaigns. These examples demonstrate the versatility and impact of statistical modeling in real-world problem-solving.

The Role of Data Scientists in Implementing Statistical Models

Data scientists play a crucial role in the entire process, from data collection and preparation to model validation and interpretation. Their expertise in statistical modeling, programming, and data analysis is essential for effectively implementing these models in various contexts. Furthermore, data scientists need to effectively communicate their findings to non-technical audiences, ensuring that the insights derived from the models are actionable and impactful.

Data scientists work with diverse stakeholders to translate complex statistical models into actionable strategies and decisions.
